
Image: Google

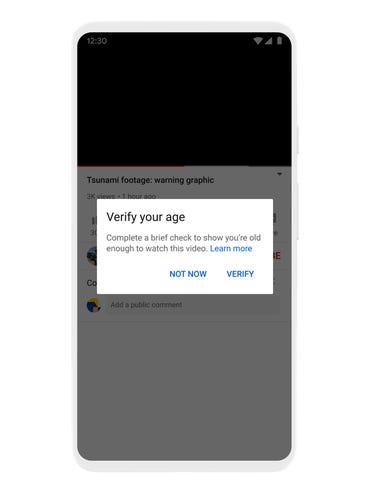

Google has announced it will be expanding age verification checks to users in Australia who want to access age-restricted content on YouTube and Google Play.

In the coming month, the search giant will introduce age verification checks that ask users to provide additional proof of age when they attempt to watch mature content on YouTube or download content on Google Play.

The move is to provide users with “age appropriate experiences,” Google government affairs and public policy senior manager Samantha Yorke explained in a blog post.

“As part of this process some Australian users may be asked to provide additional proof of age when attempting to watch mature content on YouTube or downloading content on Google Play.

“If our systems are unable to establish that a viewer is above the age of 18, we will request that they provide a valid ID or credit card to verify their age.”

Google considers a valid ID to be one issued by a government, such as a driver’s licence or passport.

The company said that if a user uploads a copy of their ID, it would be “securely stored, won’t be made public, and would be deleted” once the person’s date of birth is verified.

It noted, however, that it will not only use a person’s ID to confirm their age but also to “improve our verification services for Google products and protect against fraud and abuse”.

Google said the move is in response to the Australian government’s Online Safety (Restricted Access Systems) Declaration 2022, which requires platforms to take steps to confirm users are over the age of 18 before they can access content that could potentially be inappropriate for under-18 viewers. The declaration was introduced under the Online Safety Act.


Similar age verification steps have already been implemented in the European Union under the Audiovisual Media Services Directive (AVMSD).

To ensure the experience is consistent, viewers who attempt to access age-restricted YouTube videos on “most” third-party websites will be redirected to YouTube to sign in and verify their age before they can view them.

“It helps ensure that, no matter where a video is discovered, it will only be viewable by the appropriate audience,” Yorke said.

Meanwhile, Meta is rolling out parental supervision tools on Quest and Instagram, claiming it will allow parents and guardians to be “more involved in their teens’ experiences”.

The supervision tool for Instagram will allow parents and guardians to view how much time their teens spend on the platform and set time limits; be notified when their teen shares that they have reported someone; and view and receive updates on which accounts their teen follows and which accounts follow their teen.

There are also plans to add further features, including letting parents set the hours during which their teens can use Instagram and the ability for more than one parent to supervise a teen’s account.

The supervision tool on Instagram is currently available only in the US, but Meta says there are plans for a global rollout in the “coming months”.

Teens will need to initiate Instagram parental supervision for now in the app on mobile devices, Meta said, but it explained parents would have the option to initiate supervision in the app on the desktop by June.

“Teens will need to approve parental supervision if their parent or guardian requests it,” Meta said.

As for the VR parental supervision tools being introduced to Quest, they will be rolled out over the coming months, starting with the expansion of the existing unlock pattern on Quest headsets to allow parents to use it to block their teen from accessing experiences they deem inappropriate.

In May, Meta will automatically block teens from downloading IARC-rated age-inappropriate apps, as well as launch a parent dashboard hosting a suite of supervision tools that will link to the teen’s account based on consent from both sides.

Additionally, Meta has established what it is calling the Family Center to provide parents and guardians access to supervision tools and resources, including the ability to oversee their teens’ accounts within Meta technologies, set up and use supervision tools, and access resources on how to communicate with their teens about internet use.

“Our vision for Family Center is to eventually allow parents and guardians to help their teens manage experiences across Meta technologies, all from one central place,” the company said.

The moves from both tech giants come after the parliamentary committee responsible for conducting Australia’s social media probe released its findings earlier this week.

In its findings, the committee said online harms would be reduced if the federal government legislated requirements for social media companies to set the default privacy settings for accounts owned by children to the highest levels and for all digital devices sold in Australia to contain optional parental control functionalities.
