Google I/O 2022 is underway. During the event, we expect to hear from Google about new tools for developers, improvements included in Android 13 and maybe some new hardware. In the past, Google I/O’s opening keynote has also included presentations surrounding Chrome, Chrome OS, Android TV/Google TV and Google Assistant. We’ve also seen previews of futuristic technology during the keynote.

Below you’ll find everything Google has announced so far during the opening keynote.

Google Translate gets new languages

Alphabet CEO Sundar Pichai took to the stage to kick off Google I/O with a joke, making sure his microphone wasn’t muted after spending the last two years always starting his meetings on mute. Pichai then ran through assorted product updates, highlighting the number of air raid alerts Google has sent in Ukraine and noting that Google Translate is gaining 24 new languages.

Google Maps gets a new immersive view that makes street view look pretty lame

Pichai also demonstrated a new Immersive View in Google Maps that lets you explore select cities in what looks like Google Earth imagery combined with Street View, but on steroids. Not only can you check out cities, but you can even look inside businesses. It looked pretty cool.

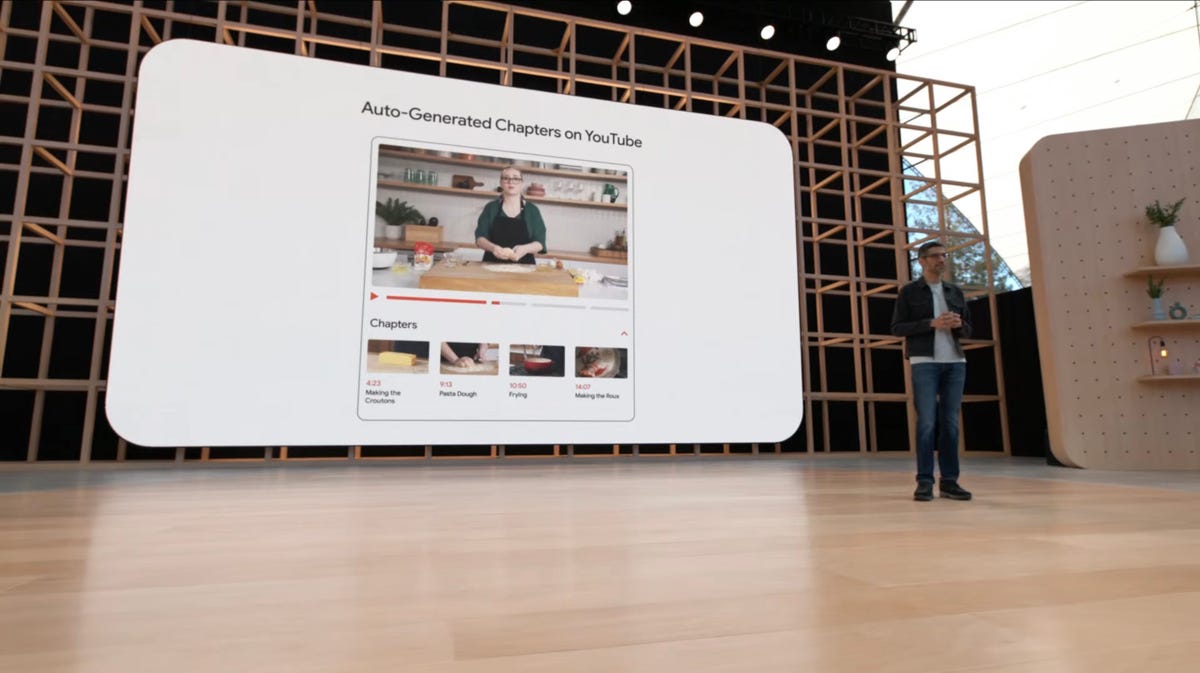

YouTube auto-generated chapters, transcriptions

Google can already auto-generate chapters for YouTube videos, and thanks to improvements to the service, it plans to automatically add chapter markers to 80 million videos by the end of the year. Google will also roll out automatic transcriptions for videos, making it easier to read what’s said in the video. This is also a big accessibility feature.

Google Docs gets auto-summary

Here’s a cool one: Google Docs will soon have a summary feature that gives you the CliffsNotes version of a long document so you can get the gist of it in just a few seconds. This is especially helpful for those who often receive meeting notes or minutes and don’t want to wade through all of the fluff.

Google previews the future of Search

Search is arguably Google’s most important product, and during Google I/O the company demonstrated new tools and features that are coming to Search. For example, you can take a picture of a part you need for a home repair, then add the term “near me” to the query, and Google will find that part in stock at a local business. The same goes for taking a photo of a plate of food. Multisearch Near Me will launch globally later this year for English users.

Another feature coming to Search, and more specifically Google Lens, will allow you to scan an aisle in the grocery store and view an overlay of information about the products in front of you. The example used during the keynote was scanning a shelf of chocolate bars to find which items meet specific criteria. It could also help you look for a specific bottle of wine or a fragrance-free bar of soap. This feature is called Scene Exploration and will launch later this year.

Google’s Real Tone initiative will move beyond the Pixel’s camera

Google’s Pixel 6 and Pixel 6 Pro cameras have a feature called Real Tone that’s designed to take accurate photos of people with darker skin tones. Google is now researching how the Monk Skin Tone Scale can be applied to other products. For example, Google has started to use the scale in Google Photos, and is adding the ability to sort Search results by skin tone.

A new Real Tone Filter for Google Photos will roll out later this year.

Google also announced it has open-sourced the skin tone scale, which you can view at skintone.google.

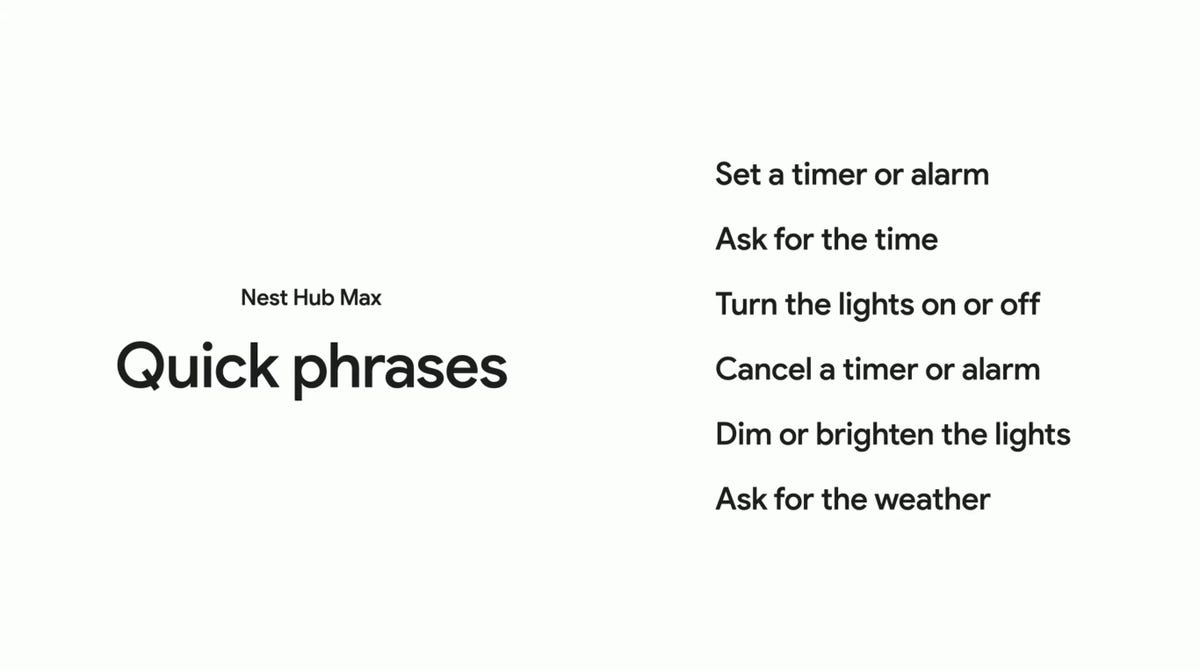

“Hey Google” is no longer required to activate Google Assistant

To make Google Assistant more accessible, Google is rolling out a new feature starting today called Look and Talk. You simply need to look at the Nest Hub Max and start talking. After you look at the screen, you’ll see that Google Assistant is waiting for you to ask a question. The demo went really well and was impressive.

Nest Hub Max is also expanding Quick Phrases, which let you skip saying “Hey Google” for things like controlling your Nest thermostat or turning off your lights.

Furthermore, Google Assistant will become more patient after being summoned. Instead of timing out and saying it doesn’t recognize what you’re asking, or trying to give an answer to the wrong question, Assistant will wait, and “encourage” you to finish your thought.

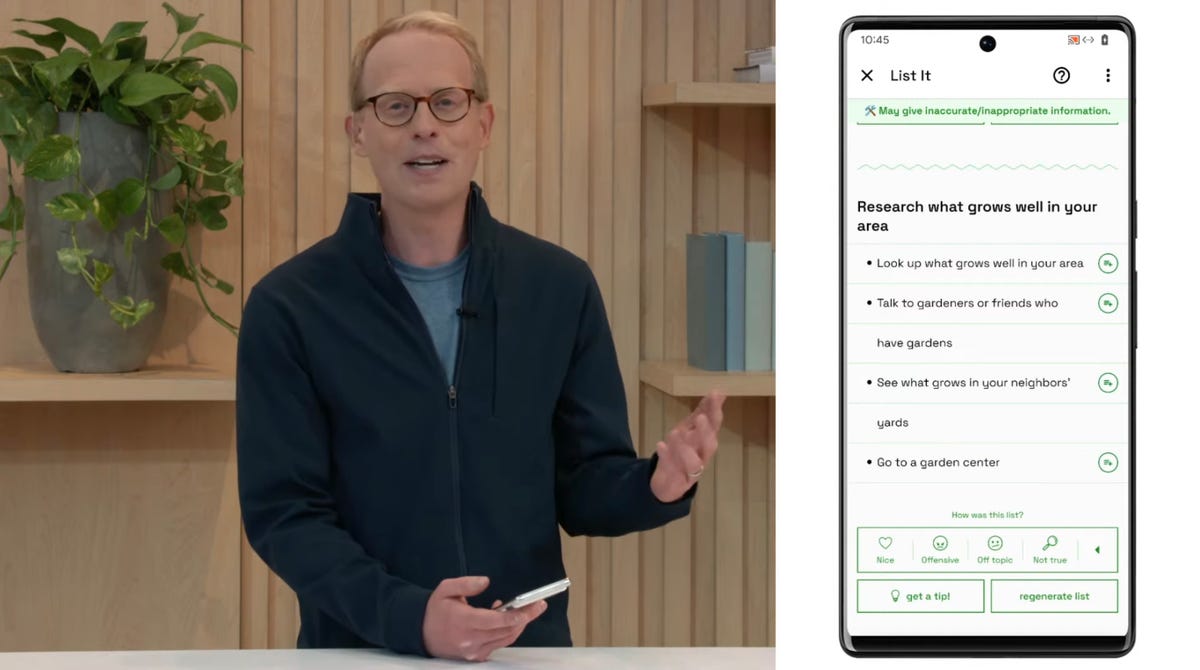

LaMDA 2 makes its debut in the AI Test Kitchen

Google’s LaMDA AI tool, which is built around having a conversation about random topics, is getting its own AI Test Kitchen app. Users can interact with LaMDA in one of three modes: Imagine It, Talk About It and List It. Access to the app will be opened up over the coming months.

There was also a demonstration of another AI technique called Chain of Thought Prompting. I don’t understand much about training AI, but the demonstration Pichai walked us through did make it look like it’d be easier for AI to understand multiple questions that are related but draw on different data sets.

New Privacy and Safety features for users, including virtual credit card numbers

Google has several new features coming to its suite of services that will make users’ data safer and ensure they can keep their private information private. For example, Android and Google Chrome users will soon be able to submit virtual credit card numbers when making online purchases, helping prevent fraud.

There’s also a new account safety feature that will alert users if there are additional steps they need to take to secure their account. The alert can be as simple as a reminder to enable two-step authentication. Google is also expanding its phishing scam scanning to Google Docs, Sheets and Slides.

If you find any of your personal information, such as a phone number, address or email address, in Google search results, there will soon be an automated way to ask Google to remove it.

You can read more about the new privacy and safety features here.
