AI chatbots have become valuable tools for all kinds of text-based tasks, such as writing, coding, and research. However, they have typically been limited to text. That is changing with a recent shift toward multimodal inputs, and Bing Chat is now joining the trend.

On Tuesday, Microsoft announced that Bing Chat will now accept images as part of its prompts. Users can upload images they would like to learn more about, or images that relate to their prompt in some way.

For example, users who see a painting and wonder who painted it can simply upload a photo and ask Bing Chat. Bing Chat, leveraging GPT-4, can now interpret the image and identify the painter.

When GPT-4 first launched in March, one of its biggest improvements was the ability to process multimodal prompts, specifically prompts that combine images and text.

This Bing Chat integration marks the first time GPT-4's multimodal abilities have been made available in a chatbot; even ChatGPT Plus users do not yet have access to this feature.

Microsoft suggested other fun uses for the feature, such as uploading a photo of the contents of your fridge and asking for a lunch idea, or asking about the architecture of buildings in a new city.

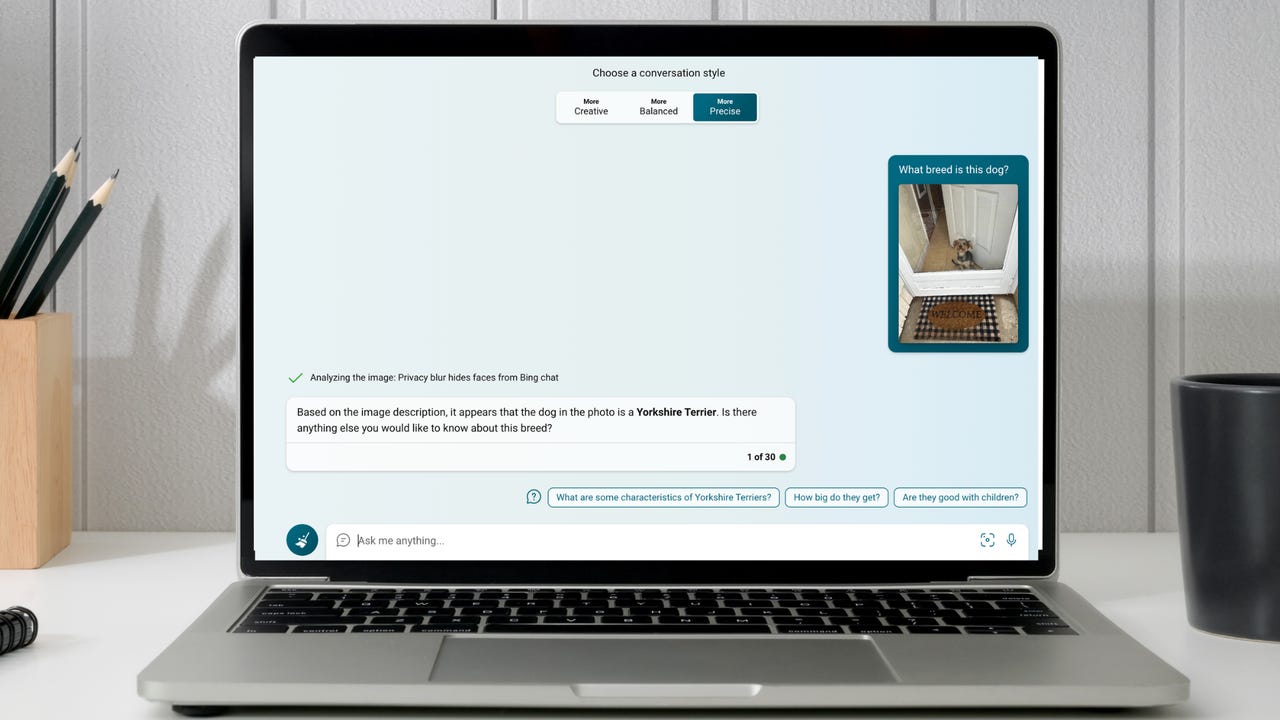

The feature begins rolling out to users today on desktop and in the mobile app. It has already reached my account, so I put it to the test.

I uploaded an image of my puppy and asked Bing Chat what breed he was. Within seconds, Bing Chat returned an accurate answer, as seen in the image at the top of the article.

This release follows Google's addition of image inputs to its Bard chatbot last week through an integration with Google Lens.