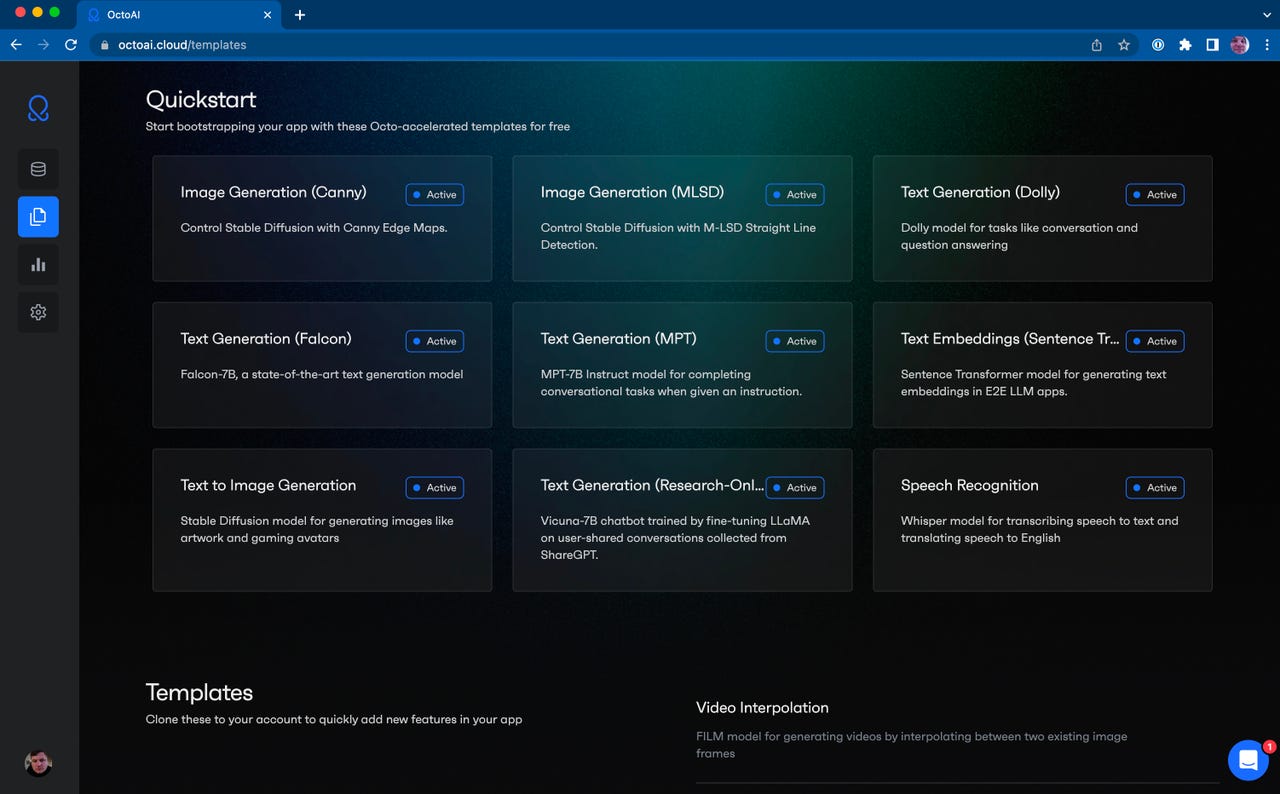

The OctoAI compute service offers templates to use familiar generative AI programs such as Stable Diffusion 2.1, but customers can also upload their own completely custom models. OctoML

The fervent interest in running artificial intelligence programs, especially of the generative variety, such as OpenAI’s ChatGPT, is producing a cottage industry of services that can streamline the work of putting AI models into production.

Also: 92% of programmers are using AI tools, says GitHub developer survey

On Wednesday, startup OctoML, which cut its teeth improving AI performance across diverse computer chips and systems, unveiled a service to smooth the work of serving up predictions, the “inference” portion of AI, called OctoAI Compute Service. Developers who want to run large language models and the like can upload their model to the service, and OctoAI takes care of the rest.

That could, says the company, bring a lot more parties into AI serving, not just traditional AI engineers.

“The audience for our product is general application developers that want to add AI functionality to their applications, and add large language models for question answering and such, without having to actually put those models in production, which is still very, very hard,” said OctoML co-founder and CEO Luis Ceze in an interview with ZDNET.

Generative AI, said Ceze, is even harder to serve up than some other forms of AI.

“These models are large, they use a lot of compute, it requires a lot of infrastructure work that we don’t think developers should have to worry about,” he said.

Also: OctoML CEO: MLOps needs to step aside for DevOps

OctoAI, said Ceze, is “basically a platform that typically would have been built by someone using a proprietary model” such as OpenAI for ChatGPT. “We have built a platform that is equivalent, but that lets you run open-source models.

“We want people to focus on building their apps, not building their infrastructure, to let people be more and more creative.”

The service, which is now available for general access, has been in limited access with some early customers for about a month, said Ceze, “and the reception has exceeded everyone’s expectations by a very wide margin.”

Although the focus of OctoAI is inference, the program can perform some limited amount of what’s known as “training,” the initial part of AI where a neural net is developed by being given parameters for a goal to optimize.

Also: How ChatGPT can rewrite and improve your existing code

“We can do fine-tuning, but not training from scratch,” said Ceze. Fine-tuning refers to follow-on work that happens after initial training but before inference, to adjust a neural network program to the particularities of a domain, for example.

“We want people to focus on building their apps not building their infrastructure, to let people be more and more creative,” says OctoML co-founder and CEO Luis Ceze. OctoML

That emphasis has been a “deliberate choice” of OctoML’s, said Ceze, given inference is the more frequent task in AI.

“Our work has been focused on inferencing because I’ve always had a strong conviction that for any successful model, you’re going to do a lot more inferencing than training, and this platform is really about putting models into production, and in production, and for the lifetime of models, the majority of the cycles go to inference.”

OctoML’s team is well-versed in the intricacies of putting neural networks into production given their central role in developing the open-source project Apache TVM. Apache TVM is a software compiler that operates differently from other compilers. Instead of turning a program into typical chip instructions for a CPU or GPU, it studies the “graph” of compute operations in a neural net and figures out how best to map those operations to hardware based on dependencies between the operations.
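To make the idea of a compute "graph" concrete, here is a minimal Python sketch. It is not Apache TVM's API or its actual algorithm; the operation names and the toy network are hypothetical, chosen only to show how knowing the dependencies between operations reveals which ones can be scheduled together.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical toy network: each operation maps to the operations it depends on.
graph = {
    "conv1": {"input"},
    "relu1": {"conv1"},
    "conv2a": {"relu1"},
    "conv2b": {"relu1"},
    "add": {"conv2a", "conv2b"},
    "softmax": {"add"},
}


def schedule_levels(deps):
    """Group operations into stages; ops in one stage do not depend on each other."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    levels = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything whose inputs are finished
        levels.append(ready)
        sorter.done(*ready)
    return levels


levels = schedule_levels(graph)
for stage, ops in enumerate(levels):
    print(stage, ops)
```

In this toy graph, the two branches `conv2a` and `conv2b` land in the same stage because neither depends on the other, which is the kind of information a graph-level compiler can use when deciding how to place work on hardware.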

Also: How to use ChatGPT to create an app

The OctoML technology that came out of Apache TVM, called the Octomizer, is “like a component of an MLOps, or DevOps flow,” said Ceze.

The company “took everything we’ve learned” from Apache TVM and from building the Octomizer, said Ceze, “and produced a whole new product, OctoAI, that basically wraps all of these optimization services, and then full serving, and fine-tuning, a platform that enables users to take AI into production and even run it for them.”

Also: AI and DevOps, combined, may help unclog developer creativity

The service “automatically accelerates the model,” chooses the hardware, and “continually moves to what’s the best way to run your model, in a very, very turnkey way.”

Using cURL at the command line is a simple way to get started with templated models. OctoML

The result for customers, said Ceze, is that the customer no longer needs to be explicitly an ML engineer; they can be a generalist software developer.

The service lets customers either bring their own model or draw upon “a set of curated, high-value models,” including Stable Diffusion 2.1, Dolly 2, LLaMa, and Whisper for audio transcription.

Also: Meet the post-AI developer: More creative, more business-focused

“As soon as you log in, as a new user, you can play with the models right away, you can clone them and get started very easily.” The company can help fine-tune such open-source programs to a customer’s needs, “or you can come in with your fully-custom model.”

So far, the company sees “a very even mix” between customers using open-source models and customers bringing their own custom models.

The initial launch partner for infrastructure for OctoAI is Amazon’s AWS, which OctoML is reselling, but the company is also working with some customers who want to use capacity they are already procuring from public cloud.

Uploading a fully-custom model is another option. OctoML

For the sensitive matter of how to upload customer data to the cloud for, say, fine-tuning, “We started from the get-go with SOC2 compliance, so we have very strict guarantees of mixing user data with other users’ data,” said Ceze, referring to the SOC2 voluntary compliance standard developed by the American Institute of CPAs. “We have all that, but we have customers asking for more now for private deployments, where we actually carve out instances of the system and run it in their own VPC [virtual private cloud].”

“That is not being launched right away, but it’s in the very, very short-term roadmap because customers are asking for it.”

Also: Low-code platforms mean anyone can be a developer — and maybe a data scientist, too

OctoML goes up against parties large and small who also sense a tremendous opportunity in the surge of interest, since ChatGPT, in serving up predictions with AI programs.

MosaicML, another young startup with deep technical expertise, in May introduced MosaicML Inference, a service for deploying large models. And Google Cloud last month unveiled G2, which it says is “purpose-built for large inference AI workloads like generative AI.”

Ceze said several things differentiate OctoAI from competitors. “One of the things that we offer that is unique is that we are focused on fine-tuning and inference,” rather than “a product that has inference merely as a component.” OctoAI, he said, also distinguishes itself by being “purpose-built for developers to very quickly build applications” without requiring them to be AI engineers.

Also: AI design changes on the horizon from open-source Apache TVM and OctoML

“Building an inference focus is really hard,” observed Ceze, “you have very strict requirements” including “very strict uptime requirements.” When running a training session, “if you have faults you can recover, but in inference, the one request you miss could make a user upset.”

“I can imagine people training the models with MosaicML and then bringing them to OctoML to serve up,” added Ceze.

OctoML is not yet publishing pricing, he said. The service initially will allow prospective customers to use it for free, from the sign-up page, with an initial allotment of free compute time, and “then pricing is going to be very transparent in terms of here is what it costs for you to use compute, and here’s how much performance we’re giving you, so that it becomes very, very clear, not just the compute time, and you’re getting more work out of the compute that you’re paying for.” That will be priced, ultimately, on a consumption basis, he said, where customers pay only for what they use.

Also: The 5 biggest risks of generative AI, according to an expert

There’s a distinct possibility that a lot of AI model serving could move from being the exotic, premium service it is today to being a commodity.

“I actually think that AI functionality is likely to be commoditized,” said Ceze. “It’s going to be exciting for users, just like having spell checking or grammar checking, just imagine every text interface having generative AI.”

At the same time, “the larger models will still be able to do some things the commodity models can’t,” he said.

Scale means that the complexity of inference will be a challenging problem to solve for some time, he said. Large language models of 70 billion or more parameters require a hundred “giga-ops,” or one hundred billion operations, for every word that they generate, noted Ceze.
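A rough back-of-the-envelope check of that figure can be sketched in Python. The factor of two operations per parameter (one multiply and one add) is a common rule of thumb and an assumption here, not something Ceze stated; the sketch also treats one generated token as one word, which is only approximately true.

```python
def ops_per_token(num_parameters, ops_per_parameter=2):
    """Estimate operations needed to generate one token.

    Assumption: each parameter takes part in roughly one multiply and one add.
    """
    return num_parameters * ops_per_parameter


# 70 billion parameters, the model size cited in the article.
giga_ops = ops_per_token(70_000_000_000) / 1e9
print(f"{giga_ops:.0f} giga-ops per generated token")
```

Under these assumptions the estimate lands in the same order of magnitude as the hundred giga-ops per word that Ceze cites.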

Also: This new technology could blow away GPT-4 and everything like it

“This is many orders of magnitude beyond any workload that’s ever run,” he added, “And it requires a lot of work to make a platform scale to a global level.

“And so I think it’s going to bring business to a lot of folks, even if it’s commoditized, because we are going to have to squeeze performance and compute capabilities from everything we have in the cloud and GPUs and CPUs and stuff on the edge.”
