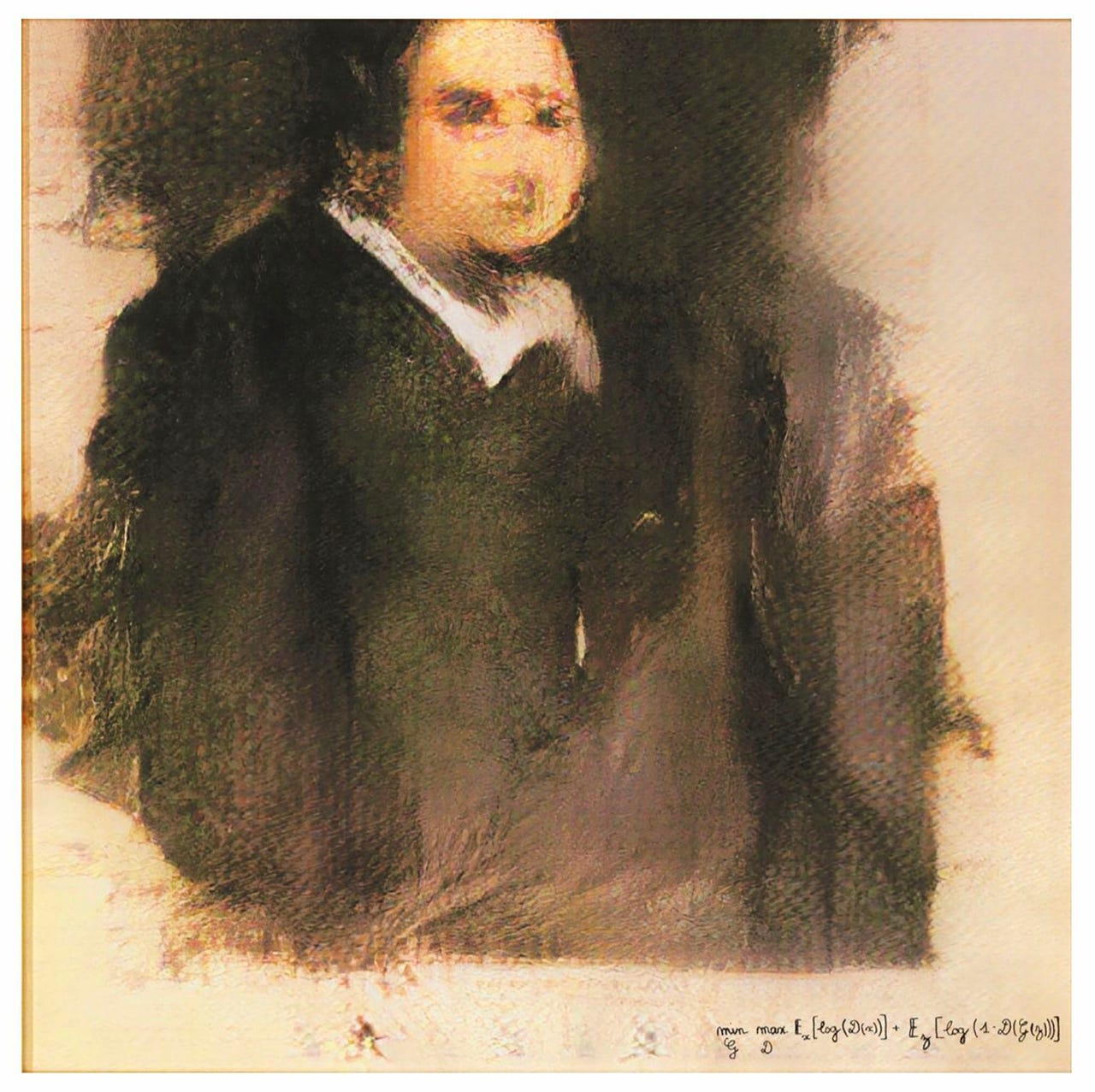

“Edmond de Belamy,” produced by the art group Obvious and auctioned at Christie’s in 2018 for $432,500, relied on generative adversarial network algorithms developed over years by various parties. The algorithm behind the painting was trained on a large number of artworks by artists through the ages so that it would produce art in a particular style. Image: MIT

It’s no secret that people’s notions about artificial intelligence are generally ridiculous. They regularly impute a presence to what is really just a machine, much as former Google engineer Blake Lemoine did last year when he claimed a chatbot, LaMDA, was “sentient.”

Even the reporters tasked with conveying an understanding of the technology often put forward hype instead of knowledge.

Now, in an attempt to combat some bad notions and focus attention on important questions about AI, a group of scholars warned on Thursday that humans should disabuse themselves of the misconception that AI programs are “artists.”

“The very term ‘artificial intelligence’ might misleadingly imply that these systems exhibit human-like intent, agency, or even self-awareness,” write Ziv Epstein, a postdoctoral researcher at the Massachusetts Institute of Technology Media Lab, and several colleagues from collaborating institutions, in an article in the prestigious journal Science.

The article, “Art and the science of generative AI,” was published online Thursday for the June 16 print edition of Science.

“Natural language-based interfaces now accompany generative AI models, including chat interfaces that use the ‘I’ pronoun, which may give users a sense of human-like interaction and agency,” the authors write.

The authors warn that imputing agency to an AI program could lead people to several follow-on false beliefs, such as failing to credit the human creators of the work.

“These perceptions can undermine credit to the creators whose labor underlies the system’s outputs and deflect responsibility from developers and decision-makers when these systems cause harm,” the authors write.

The article addresses multiple perspectives about AI that have become prevalent in recent years.

There have been some infamous art-world displays of “AI-generated art” in recent years that do, in fact, obscure the origins of a work’s production.

One of the most striking PR moments of the AI age was Christie’s October 2018 sale of “Edmond de Belamy,” a painting output by an algorithm, for $432,500. The auctioneers, and the curators who profited, touted the painting as “created by an artificial intelligence.”

At the same time, however, there is a thread of research stretching back many years in which computer scientists and artists have tried to create artworks with computers in ways that may produce quasi-random effects. That line of inquiry seeks to find the dividing line between what humans control and what the computer produces spontaneously, beyond human control.

Such programs are often grouped together as “generative art systems with intentionally unexpected output,” and are sometimes referred to as “decentralized autonomous artists” or “autonomous art systems,” Epstein noted in an email to ZDNET.

Examples of the generative programs, said Epstein, include Botto, Electric Sheep, Abraham, Meet the Ganimals, and Artificial FM.

Some of the programs, including Botto and Ganimals, use so-called “generative AI,” such as generative adversarial networks, or “GANs.” But not all of the programs take that approach.

“Unlike direct input-output usage of generators, these often include systems that respond to input from a crowd, or some other data stream, and thus make outputs that are intentionally unexpected,” Epstein told ZDNET.

The generative movement has explored the line between human intent and spontaneous machine output.

In 2006, long before the current wave of AI, computer science professor Simon Colton of Queen Mary College, London, offered what he called “The Painting Fool,” a program that produces images via a mix of rules given by the human operator and other parameters the human doesn’t explicitly manipulate. For example, the Painting Fool could detect a person’s “sentiment” via sentiment-detection software and use its interpretation of that sentiment as one input to the work of art.

In The Guardian newspaper in 2017, Colton told reporter Gemma Kappala-Ramsamy that his purpose was to explore “what it means for software to be creative,” with the aim of “the software itself to be taken seriously as a creative artist in its own right, one day.”

In more recent instances, artists have called attention to surprising things they found in the output of generative AI, things that seemed to point to something going on inside the program beyond what they anticipated, such as the recurring figure of Loab last year.

In Science, Epstein and team refer to a much longer article, posted on the arXiv preprint server, in which they explain in detail why it’s important not to casually regard the machine as autonomous, however impressive its results.

Epstein and team emphasize their choice to discuss generative AI “as a tool to support human creators, rather than an agent capable of harboring its own intent or authorship. In this view, there is little room for autonomous machines being ‘artists’ or ‘creative’ in their own right.”

The authors base their point of view on a concept called “meaningful human control,” or MHC, which, they write, is “originally adapted from autonomous weapons literature that refers to a human operator’s control over and responsibility for a computational system.”

“MHC is achieved,” they explain, “if human creators can creatively express themselves through the generative system, leading to an outcome that aligns with their intentions and carries their personal, expressive signature.”

Future work is needed, Epstein and colleagues conclude, to develop generative systems that afford human users “fine-grained causal manipulation over outputs” when they create works using AI.