
Intel on Tuesday launched the latest generation of its deep learning processors for training and inference, Habana Gaudi2 and Habana Greco, as part of a push to make AI more accessible and valuable for its data center customers. At its Intel Vision event, the chipmaker also shared details about its IPU and GPU portfolios, all aimed at business customers.

“AI is driving the data center,” Eitan Medina, COO of Habana Labs, Intel’s data center team focused on AI deep learning processor technologies, told reporters ahead of the event. “It’s the most important application and fastest-growing. But different customers are using different mixes for different applications.”

The varied use cases explain Intel’s investment in a diversity of data center chips. Habana processors are designed for customers that need deep learning compute. The new Gaudi2 processor, for instance, can improve vision modeling of applications used in autonomous vehicles, medical imaging and defect detection in manufacturing. Intel purchased Habana Labs, an Israel-based programmable chipmaker, for approximately $2 billion in 2019.

Both the second-gen Gaudi2 and Greco chips are built on a 7-nanometer process, down from 16 nanometers for the first generation. Both are based on Habana’s high-efficiency architecture.

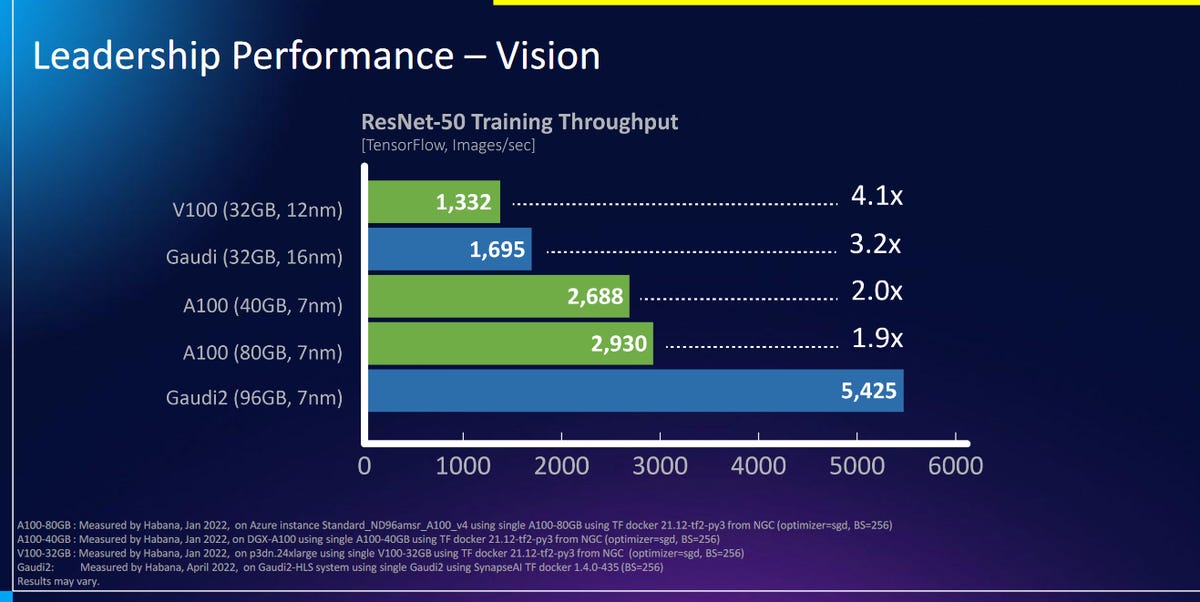

Intel said Tuesday that Gaudi2 delivers twice the training throughput of Nvidia’s A100-80GB GPU for both the ResNet-50 computer vision model and the BERT natural language processing model.

Image: Intel

“Compared with the A100 GPU, implemented in the same process node and roughly the same die size, Gaudi2 delivers clear leadership training performance as demonstrated with apples-to-apples comparison on key workloads,” Medina said in a statement. “This deep-learning acceleration architecture is fundamentally more efficient and backed with a strong roadmap.”

More on the Gaudi2:

- Builds on the first-gen Gaudi, which delivers up to 40% better price performance in the AWS cloud with Amazon EC2 DL1 instances and on-premises with the Supermicro X12 Gaudi Training Server

- Introduces an integrated media processing engine that handles compressed media and offloads the host subsystem

- Triples the in-package memory capacity from 32GB to 96GB of HBM2E, with 2.45TB/sec of bandwidth

- Integrates 24 on-chip 100GbE RoCE RDMA NICs for scaling up and scaling out over standard Ethernet

Gaudi2 processors are now available to Habana customers. Habana has partnered with Supermicro to bring the Supermicro X12 Gaudi2 Training Server to market this year.

Meanwhile, the second-gen Greco inference chip will be available to select customers beginning in the second half of this year.

More on the second-gen Greco:

- Boosts on-card memory, delivering 5x the memory bandwidth, and increases on-chip memory from 50MB to 120MB

- Adds media decoding and processing

- Moves to a smaller form factor for compute efficiency, shrinking from a dual-slot, full-height, full-length (FHFL) card to a single-slot card

“Gaudi2 can help Intel customers train increasingly large and complex deep learning workloads with speed and efficiency, and we’re anticipating the inference efficiencies that Greco will bring,” Intel EVP Sandra Rivera said in a statement.

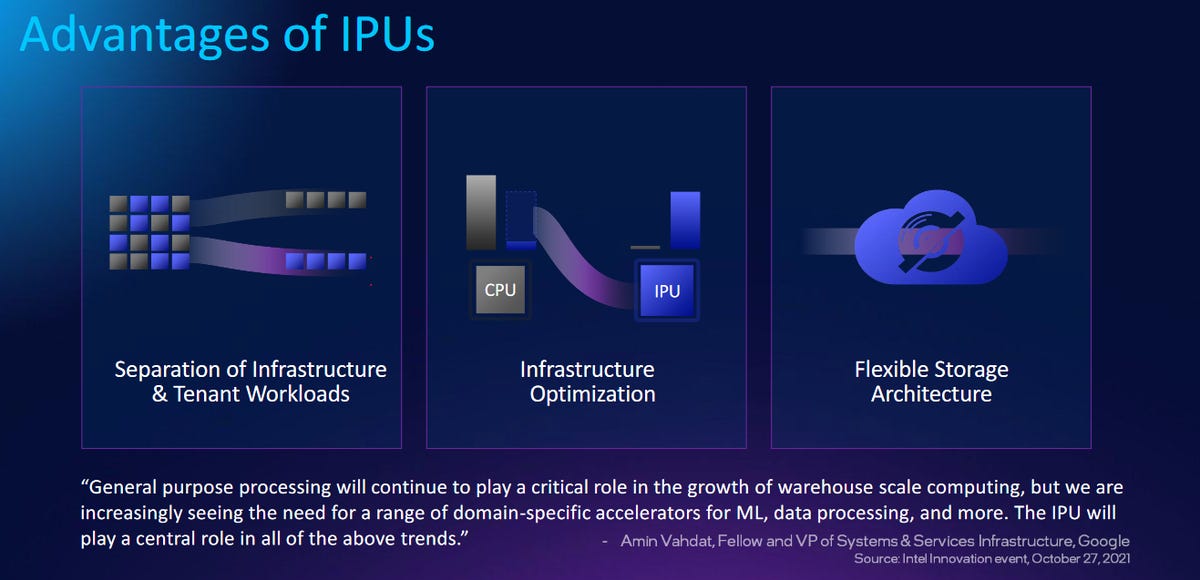

Intel on Tuesday also unveiled an extended roadmap for its Infrastructure Processing Unit (IPU) portfolio. Intel initially built IPUs for cloud giants, hyperscalers such as Google and Facebook, but it is now expanding access.

Image: Intel

Intel is shipping two IPUs this year: Mount Evans, Intel’s first ASIC IPU, and Oak Springs Canyon, Intel’s second-generation FPGA IPU, which is shipping to Google and other service providers.

In 2023 and 2024, Intel plans to roll out its third-gen, 400Gbps IPUs, code-named Mount Morgan and Hot Springs Canyon. In 2025 and 2026, Intel expects to ship 800Gbps IPUs to customers and partners.

Intel on Tuesday also shared details on its data center GPU, code-named Arctic Sound. Designed for media transcoding, visual graphics and inference in the cloud, Arctic Sound-M (ATS-M) is, according to Intel, the industry’s first discrete GPU with an AV1 hardware encoder. It targets performance of 150 trillion operations per second (TOPS). ATS-M will be available in two form factors and in more than 15 system designs from partners, including Dell, Supermicro, Inspur and H3C. It will launch in the third quarter of this year.
